Vibe Coding in The World's Largest Hackathon: Building GPXTrack.xyz

I got pretty excited when Bolt.new announced the World’s Largest Hackathon. I saw not only an opportunity to build something cool, but also (and perhaps more importantly) an opportunity to gain experience building something with AI tools. A hackathon was the perfect place to explore the capabilities of AI tools because it let me lean into the vibe coding experience. I used the AI for many more kinds of tasks than I normally would, and I let it take control of things much more than I normally would. As a result, I think I was able to discover and push the limits of AI in my own workflow much faster than I otherwise would have, so it was an excellent learning experience!

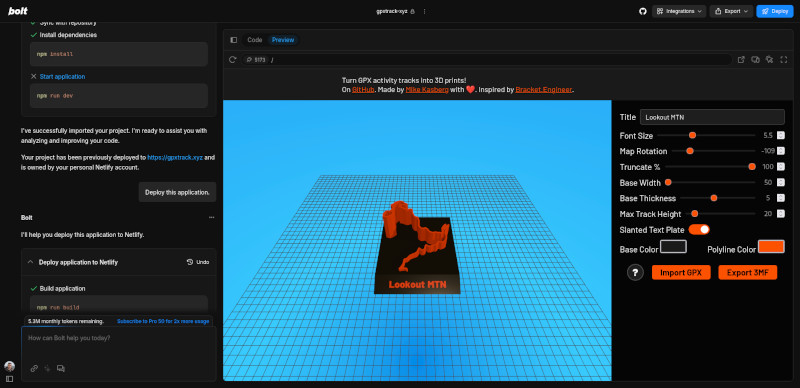

Bolt.new is StackBlitz’s new AI-first coding platform that lets you use AI chat and codegen to build web applications without leaving the browser. And while I’m not completely sold on the in-browser IDE, it’s actually grown on me a little over the course of the hackathon. I was happy to give the platform a try since Bolt.new was offering 10M tokens to anyone who wanted to participate in the World’s Largest Hackathon. Fundamentally, the tool is just an agent that’s using Claude to generate and edit code, so I think many of the approaches and techniques I’ve picked up are equally applicable to Bolt.new and to other agents, like Claude Code.

Inspiration

Recording the 3D printing show today pic.twitter.com/op9qzyvyn6

— Wes Bos (@wesbos) April 15, 2025

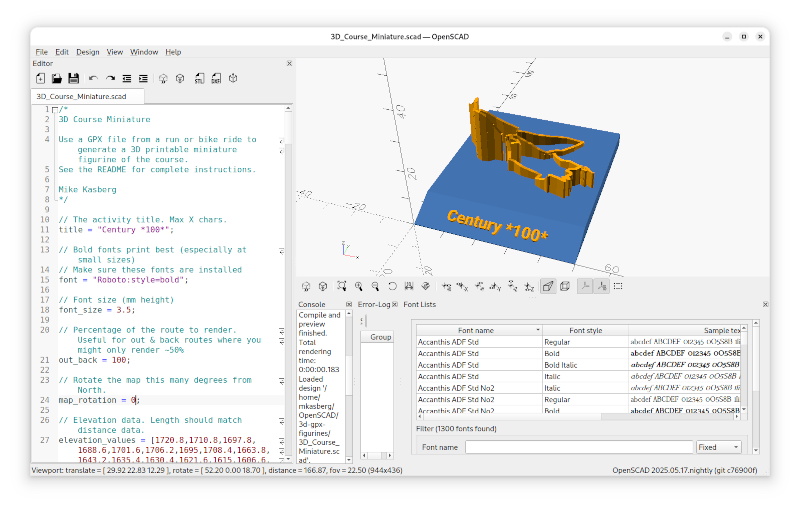

Several months ago, Wes Bos was getting into 3D printing and sharing his journey on X. I’ve been interested in 3D printing for a while, so I was eager to follow along. As Wes got deeper into 3D printing, he was searching for ways to integrate programming with 3D printing and found ManifoldCAD, a tool to programmatically generate 3D models using JavaScript. I think Wes was attracted to ManifoldCAD for many of the same reasons that I like OpenSCAD. I’ve been pretty happy using OpenSCAD for a long time, but I was a little surprised at all the things Wes was able to do when I saw Bracket.Engineer for the first time! Wes took ManifoldCAD to the next level by using it as a library, in combination with Three.js, to deploy a website that lets you customize a parametric model through a GUI in your web browser, with the full capabilities of JavaScript behind it. I thought this was awesome, and the ability to easily integrate code written in another language was something I’d been missing in OpenSCAD!

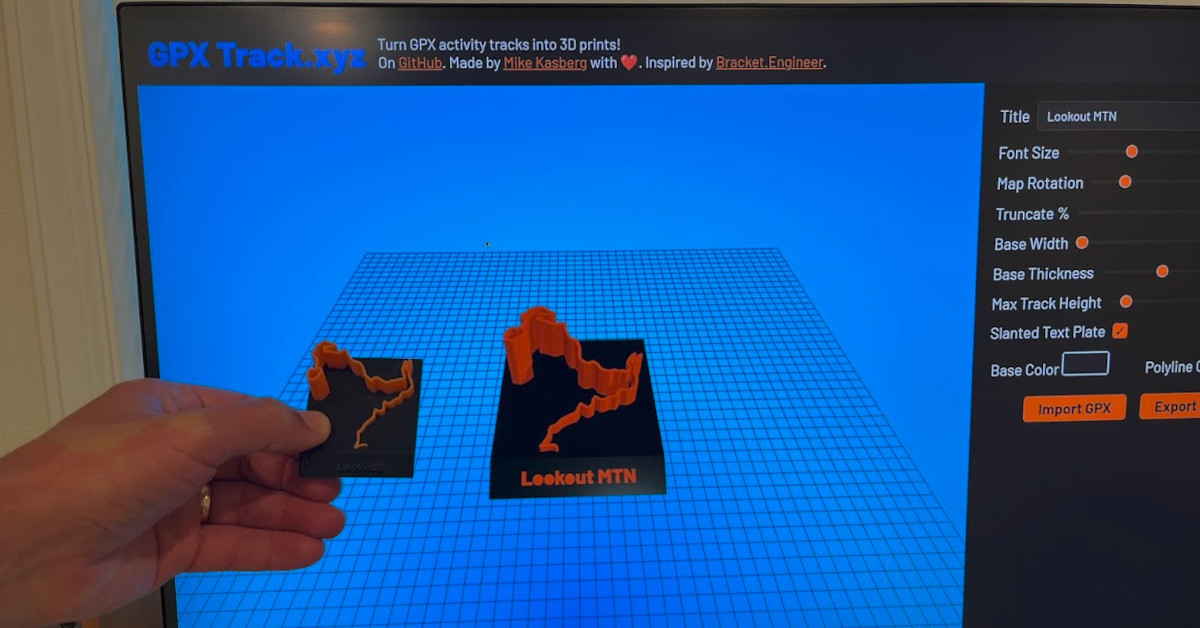

More than a year ago, I worked on a project to generate a 3D printable model from a GPX track using OpenSCAD. Because I needed a more powerful programming language than OpenSCAD itself to import data from a GPX file, I wrote some code in Ruby that would parse the GPX file and render OpenSCAD code using a template. The approach worked, and is still available at github.com/mkasberg/3d-gpx-figurines, but it was an ugly approach and I wished there was a cleaner way to do it. I realized when I saw Bracket.Engineer that the tight integration Wes had achieved between JS code and a ManifoldCAD model would provide a much cleaner way to generate a 3D model from a GPX activity track, and the World’s Largest Hackathon seemed like the perfect place to experiment with the approach. It was a perfect combination of events!
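Because GPX is plain XML, the parsing half of that job is small in JavaScript. Here is a minimal sketch, not the code from gpxtrack.xyz or from the Ruby project: it pulls the lat, lon, and optional ele values out of each trkpt element and projects them onto a flat, local coordinate system in meters, which is the kind of input a 3D model generator needs. The function names are hypothetical, the regular expressions are only there to keep the example dependency-free (in a browser, DOMParser would be the more robust choice), and the equirectangular projection is an assumption that is only accurate over the few-kilometer extent of a typical activity.

```javascript
// Minimal sketch with hypothetical helper names (not the gpxtrack.xyz code):
// read trackpoints from GPX text and project them to local x/y/z in meters.

const EARTH_RADIUS_M = 6371000; // mean Earth radius

// Extract { lat, lon, ele } from every <trkpt>...</trkpt> element.
// Assumes each trkpt has a closing tag; attribute order does not matter.
function parseTrackPoints(gpxText) {
  const points = [];
  const trkptRe = /<trkpt\b([^>]*)>([\s\S]*?)<\/trkpt>/g;
  for (const match of gpxText.matchAll(trkptRe)) {
    const attrs = match[1];
    const lat = Number(/\blat="([^"]+)"/.exec(attrs)?.[1]);
    const lon = Number(/\blon="([^"]+)"/.exec(attrs)?.[1]);
    const eleMatch = /<ele>([^<]+)<\/ele>/.exec(match[2]);
    const ele = eleMatch ? Number(eleMatch[1]) : 0; // elevation is optional
    if (Number.isFinite(lat) && Number.isFinite(lon)) {
      points.push({ lat, lon, ele });
    }
  }
  return points;
}

// Equirectangular approximation centered on the first point: good enough
// for the small extent of a single run, hike, or ride.
function projectToLocalMeters(points) {
  if (points.length === 0) return [];
  const toRad = (deg) => (deg * Math.PI) / 180;
  const origin = points[0];
  const cosLat = Math.cos(toRad(origin.lat));
  return points.map((p) => ({
    x: toRad(p.lon - origin.lon) * cosLat * EARTH_RADIUS_M, // east
    y: toRad(p.lat - origin.lat) * EARTH_RADIUS_M, // north
    z: p.ele - origin.ele, // elevation relative to the start
  }));
}
```

For example, two trackpoints 0.001° of latitude apart come out roughly 111 m apart on the y axis, ready to be scaled down to printable size.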

The AI Prompt Learning Curve

Without initially knowing what Bolt.new was capable of, or even what kind of prompts worked best, I took a naive approach and dove right in. I forked the Bracket.Engineer repo to use as a starting point, and started thinking about all the work to be done. First and foremost, I needed to get my OpenSCAD GPX path model to work in the ManifoldCAD library, or this whole project would hit a dead-end very quickly! I tried to just zero-shot it, with relatively little context, by pasting the entire OpenSCAD file into the prompt and adding a sentence or two asking Bolt to port the code to ManifoldCAD. And when that was done, I wrote another short prompt asking it to update the code to reference my new GPX model instead of the bracket model from the original repo. To my surprise and delight, it did a reasonably good job! It didn’t work perfectly on the first attempt, but it got close enough that I knew this was going to be possible!

Although my first prompt went surprisingly well, I quickly got a taste of the many ways AI can fail as I worked with it, in frustration, through half a dozen different flavors of “fix it” commits. Most of them only partially worked, and some didn’t work at all. There were a few fundamental problems with the ported 3D model (it rendered in broken ways) that the AI seemed unable to fix, so I had to dig into it myself and do some real (human) debugging. I soon realized that the AI had repeatedly made the same order-of-operations error while porting code from OpenSCAD to JS. OpenSCAD is a functional-inspired language, and commonly uses code like translate(rotate(cube)), where the innermost operation is applied first: the cube is rotated, then translated. The LLM had ported many of these operations to JS as cube.translate().rotate(), where the chained calls run left to right, so the cube is translated first and then rotated. That is not the same thing, because rotation happens about the origin: an object that has already been moved away from the origin swings to a different place when it rotates. I ended up fixing several of these errors manually, which was actually reasonably quick once I recognized the failure pattern.
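To make the difference concrete, here is a dependency-free sketch that applies both orderings to a single corner point of a cube. It deliberately avoids ManifoldCAD’s API: rotateZ and translate are hypothetical stand-ins written with plain math, with rotation about the origin as in OpenSCAD.

```javascript
// Rotate a point about the Z axis (through the origin) by `degrees`.
function rotateZ([x, y, z], degrees) {
  const r = (degrees * Math.PI) / 180;
  const c = Math.cos(r);
  const s = Math.sin(r);
  return [x * c - y * s, x * s + y * c, z];
}

// Move a point by the offset [dx, dy, dz].
function translate([x, y, z], [dx, dy, dz]) {
  return [x + dx, y + dy, z + dz];
}

// Round away floating-point noise (cos(90°) is ~6e-17, not 0);
// adding 0 turns -0 into 0.
const tidy = (p) => p.map((v) => Math.round(v * 1e9) / 1e9 + 0);

const corner = [1, 0, 0];

// OpenSCAD-style nesting, translate(rotate(cube)):
// the innermost operation runs first, so rotate, then translate.
const openscadOrder = translate(rotateZ(corner, 90), [10, 0, 0]);
// tidy(openscadOrder) → [10, 1, 0]

// The buggy chained port, cube.translate(...).rotate(...), runs left to
// right: translate first, then rotate about the origin.
const buggyPort = rotateZ(translate(corner, [10, 0, 0]), 90);
// tidy(buggyPort) → [0, 11, 0]
```

With method chaining, a faithful port of translate(rotate(cube)) therefore calls rotate first and translate second, assuming the chained methods apply left to right as described above.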

I ended my first day of “vibe coding” optimistic about the possibilities, but also frustrated by the reality that the AI had written lots of bugs and left other tasks incomplete, and that fixing the bugs and getting things working had been incredibly slow so far. Before starting the next day, I had time to think about the approach I’d taken on the first day: what had worked, and what had not. I noticed that Bolt was actually creating a new git commit every time I asked it to change something (which led to a lot of “fix something” commits). I also explored the Bolt UI more deeply and noticed that it supported working on different branches. For my second day of vibe coding, I decided to try doing all my work on a branch and opening a pull request with the changes, which would give me a better way to review code before it hit my main branch.

AI Troubleshooting Techniques

OpenSCAD supports 3D text and ManifoldCAD does not, but I knew that 3D text was an important part of my project and I wanted to find a way to implement it. I started a new branch in Bolt. I had no idea how we were going to make this work, so I started asking the LLM questions. I think this was my first use of the “Discussion” mode in Bolt. I asked it if there were any common techniques for using 3D text in ManifoldCAD. (There weren’t, as ManifoldCAD doesn’t have that feature.) So I asked it, more generally, what the most common approach to creating 3D text was. It outlined a process that exports contours from a 2D font and extrudes those contours into a 3D model. Then I asked it to make a plan that would allow us to use extruded font contours in our own project. It made a fairly detailed plan to use opentype.js to extract contours from a web font, send the contours to our model in the right format, and extrude them as 3D text. At this point it was working well beyond my own knowledge of fonts, contours, and 3D rendering. I could maybe have figured all this out on my own, but it would have taken days of reading arcane material about how fonts work. I learned a lot quickly just by watching the LLM think, and then I asked it to implement the plan.
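The contour-export step in that plan is easier to follow with an example. opentype.js represents an outline as a Path whose commands array contains move (M), line (L), quadratic curve (Q), cubic curve (C), and close (Z) commands, each storing its coordinates in x, y, x1, y1, x2, and y2 fields. The sketch below assumes that command shape as input and flattens each closed outline into a polygon by sampling the curves; toContours and its segments parameter are hypothetical names, not code from the project.

```javascript
// Sketch: turn opentype.js-style path commands into polygon contours
// (arrays of [x, y] points) that an extrusion step could consume.
// `segments` controls how many straight lines approximate each curve.
function toContours(commands, segments = 8) {
  const contours = [];
  let current = [];
  let pen = [0, 0];

  // Keep the current contour only if it encloses an area (3+ points).
  const finish = () => {
    if (current.length >= 3) contours.push(current);
    current = [];
  };

  for (const cmd of commands) {
    switch (cmd.type) {
      case "M": // move: start a new contour
        finish();
        pen = [cmd.x, cmd.y];
        current.push(pen);
        break;
      case "L": // straight line to (x, y)
        pen = [cmd.x, cmd.y];
        current.push(pen);
        break;
      case "Q": { // quadratic Bézier with control point (x1, y1)
        const [x0, y0] = pen;
        for (let i = 1; i <= segments; i++) {
          const t = i / segments;
          const u = 1 - t;
          current.push([
            u * u * x0 + 2 * u * t * cmd.x1 + t * t * cmd.x,
            u * u * y0 + 2 * u * t * cmd.y1 + t * t * cmd.y,
          ]);
        }
        pen = [cmd.x, cmd.y];
        break;
      }
      case "C": { // cubic Bézier with control points (x1, y1) and (x2, y2)
        const [x0, y0] = pen;
        for (let i = 1; i <= segments; i++) {
          const t = i / segments;
          const u = 1 - t;
          current.push([
            u * u * u * x0 + 3 * u * u * t * cmd.x1 + 3 * u * t * t * cmd.x2 + t * t * t * cmd.x,
            u * u * u * y0 + 3 * u * u * t * cmd.y1 + 3 * u * t * t * cmd.y2 + t * t * t * cmd.y,
          ]);
        }
        pen = [cmd.x, cmd.y];
        break;
      }
      case "Z": // close: the polygon implicitly returns to its first point
        finish();
        break;
    }
  }
  finish(); // handle a trailing contour with no Z
  return contours;
}
```

One detail worth knowing: Font.getPath in opentype.js produces canvas-style coordinates where y grows downward, so mirroring those points into a y-up 3D scene reverses the direction in which each contour winds.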

It wrote a lot of code, and it seemed mostly correct to me at first glance. There were a couple of errors, and the AI was able to work through them and fix them. But once the errors were gone, we were stuck: lots of code that I didn’t really understand, no errors, and no 3D text. I was stumped, and I took a break to think. I didn’t want to debug all this font code myself, but the AI was unable to make progress. Asking it to fix the problem, with no error message and no clear indication of what was wrong, made it start hallucinating issues and breaking things further as it tried to fix the wrong thing. What would I do if I were the AI in this situation? I’d want logs. Back to basics: print statement debugging. And that’s exactly what I asked the AI to do. I told it that we had a problem because there were no errors but the text wasn’t showing up, and that we needed to use console.log to validate our assumptions.
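A print-debugging pass like that works best when every log line states something checkable. As a sketch, assuming contours are arrays of [x, y] points like the ones in a font-outline pipeline, a hypothetical pass-through helper can be wrapped around each stage: it logs how many contours and points survived and their bounding box, then returns the data unchanged so it is easy to remove later.

```javascript
// Hypothetical debugging helper: summarize contours in one readable line.
function summarizeContours(contours) {
  const points = contours.flat(); // every [x, y] point, across all contours
  if (points.length === 0) return `contours=${contours.length} points=0`;
  const xs = points.map(([x]) => x);
  const ys = points.map(([, y]) => y);
  const r = (v) => Math.round(v * 100) / 100; // 2 decimals for readability
  return (
    `contours=${contours.length} points=${points.length} ` +
    `x=[${r(Math.min(...xs))}, ${r(Math.max(...xs))}] ` +
    `y=[${r(Math.min(...ys))}, ${r(Math.max(...ys))}]`
  );
}

// Log a labeled checkpoint and pass the data through unchanged, so the call
// can wrap any stage of the pipeline and be deleted once the bug is found.
function logStage(label, contours) {
  console.log(`[text3d] ${label}: ${summarizeContours(contours)}`);
  return contours;
}
```

A one-line summary per stage also keeps the output short enough to paste back into a chat with the AI.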

I was blown away by the results. It added beautiful log messages in more than a dozen different places throughout the complicated code it had just written, and logged exactly the right thing in a nice, readable form at every location. It probably would have taken me an hour to do that myself, and the logs wouldn’t have looked as nice. This was great! I started up the app, got dozens of lines of logs, and was just starting to get sad about reading through them all when it occurred to me to try pasting all the log output back to the LLM. So I did. I pasted more than 50 lines of the logs it had just written itself and asked it to interpret the results. And I was blown away again. It drew correct conclusions from all of its log messages and showed its reasoning in bullet form. It stated quite confidently that our contours were correct all the way through the pipeline, but that we weren’t getting 3D shapes, probably because our “winding order” was backwards. Okay, I told it to fix the winding order. It added a .reverse() in the right spot, and I had mostly-working 3D text in ManifoldCAD! I was ecstatic! All this work had been done on a branch, so I asked it to remove the logs it had added and clean up the code for a PR. I didn’t closely review all of the new font-rendering code, but I knew it worked before I merged it because I had the opportunity to test it, and that was good enough for me!
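Winding order is the direction, clockwise or counterclockwise, in which a contour’s points are listed. Many polygon-filling and extrusion routines use it to tell outlines from holes (like the inside of an “o”), so a backwards winding can produce empty geometry without raising any error, which matches the symptom described above. The sketch below shows one common way to detect and fix it with the shoelace formula, assuming y-up coordinates and an extrusion step that wants counterclockwise outer contours; those conventions and the helper names are assumptions, and the post’s actual fix was a single .reverse() call in the right place.

```javascript
// Shoelace formula: the signed area is positive for counterclockwise
// contours and negative for clockwise ones (with y pointing up).
function signedArea(contour) {
  let sum = 0;
  for (let i = 0; i < contour.length; i++) {
    const [x1, y1] = contour[i];
    const [x2, y2] = contour[(i + 1) % contour.length]; // wrap to the start
    sum += x1 * y2 - x2 * y1;
  }
  return sum / 2;
}

// If the largest contour (assumed to be an outer boundary) runs clockwise,
// reverse every contour. Flipping all of them together keeps holes wound
// opposite to their outlines, which a non-zero fill rule relies on.
function ensureCounterClockwise(contours) {
  if (contours.length === 0) return contours;
  const largest = contours.reduce((a, b) =>
    Math.abs(signedArea(b)) > Math.abs(signedArea(a)) ? b : a
  );
  if (signedArea(largest) >= 0) return contours; // already correct
  return contours.map((c) => [...c].reverse()); // copy, then reverse
}
```

Reversing every contour at once, rather than forcing each one to counterclockwise individually, matters for letters with holes: forcing the hole counterclockwise too would make it fill in.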

Moving Faster

As I used the LLM more, I became much more confident with it. I was developing a sense of its abilities: I knew what it did well, and I knew what it would struggle with. I started crafting better prompts to guide it around the parts I knew would be tricky. My prompts grew more precise, and the generated code became more precise as a result. One trick I learned was that it always helped to discuss the plan before generating any code. With this technique, I could be a little lazy and provide a mediocre prompt with a sentence or two about what we needed to do, and the LLM would generate a short paragraph and several bullets about what needed to happen. If the plan was bad, I had a chance to revise it before we started going down the wrong path in code. And if the plan was good, the LLM always made better edits from its own detailed plan than it did from my short, vague description. Using this technique, I got to the point where, on several occasions, the LLM generated a PR on the first try that I was able to merge with no edits! And it felt awesome, because I knew I was moving fast. When things went smoothly, I could generate, review, and merge a PR in minutes that would probably have taken an hour or more without AI help.

The confidence I gained solving the font problem, along with the other techniques I’d acquired along the way, made me ambitious enough to try tackling bigger problems. I had the LLM move our model generation to a web worker to improve performance, and got great results! If I hadn’t been using an AI code assistant, I might not have even tried that refactoring. I’d have needed to spend a lot of time learning new things and fixing problems that were difficult to debug, and it probably wouldn’t have seemed worthwhile, since the performance without this optimization wasn’t unbearable. But with AI help, I felt like I’d be able to try something quickly to see if it would work, even using technologies I didn’t have a lot of experience with. (I initially tried a different approach, and discarded it pretty quickly when it wasn’t working well. AI let me experiment very quickly, and pivot just as quickly when something wasn’t working.)

Looking Ahead

I’m really happy with gpxtrack.xyz! (And if you’re a runner, hiker, or cyclist, you should go check it out!) Not only did I learn a ton about building web applications with AI, but I built something really cool along the way. It’s open source on GitHub, so you can check out the code if you’re interested!

At the end of this experience, what I think I’m most excited about is seeing AI lower the cost of trying things. Adam Wathan has already shared an example of a project he and his team were able to finish that they might not have even tried without AI help. The future is exciting, but I think LLM technology is still so new that most engineers using it have no idea what it’s capable of. I think the fastest way for us to find out is to build new things and try new things, and the World’s Largest Hackathon was a great way to do that. Some of my most important takeaways are:

- Don’t let the AI wander too much on its own. It’ll start hallucinating things, duplicating functionality, and doing other things that are terrible for the long-term health of a codebase.

- To prevent that, review the generated code regularly – either right when the AI generates it, or with a PR, or with some other process.

- Discuss problems and make a detailed plan before writing code. Let the AI write a detailed prompt for itself. The AI is better at writing prompts for itself than you are, and this gives you an opportunity to review the plan before it’s done in code.

- AIs are great at troubleshooting if they have the tools and context they need. Let them add logs, like a human would.

- AIs shift costs around to make things like experimentation much cheaper (in terms of developer time) than they otherwise would have been.

AI technology continues to evolve so rapidly that it can feel hard to keep up, but I’m excited to see what the future holds!

About the Author

👋 Hi, I'm Mike! I'm a husband, I'm a father, and I'm a staff software engineer at Strava. I use Ubuntu Linux daily at work and at home. And I enjoy writing about Linux, open source, programming, 3D printing, tech, and other random topics. I'd love to have you follow me on X or LinkedIn to show your support and see when I write new content!
